The various RAID types (levels) store data differently, prioritize different things (fault tolerance, throughput, cost), and are therefore suited to different situations. There’s also the matter of how effective RAID is today. With the advent of alternative technologies like SSDs and Erasure Coding, the question looms: is RAID still worth it? We’ll discuss all of these and similar topics in this article.

What is RAID? How Does It Work?

RAID is a storage technology used to configure multiple physical disks as a single logical disk, which is commonly addressed by a Logical Unit Number (LUN). Generally speaking, in a RAID setup, data is stored across multiple disks, with the primary objective of data redundancy and/or performance improvement. Data redundancy increases reliability by acting as a safety net against disk failure. Similarly, combining the throughput of multiple disks provides significant performance boosts for I/O operations.

Of course, this is only a broad description. How much an array favors redundancy or performance depends on the type of RAID used, typically referred to as the RAID level. Some levels are designed for one exclusive purpose, while others balance both. The standard RAID levels are RAID 0 through RAID 6, but there are numerous other levels that fall under categories like nested RAID and non-standard RAID. We’ll cover all of these in more detail later.

As for how RAID actually works, it relies on three techniques: data striping, disk mirroring, and parity. Depending on the RAID level, one or any combination of these techniques may be used.

Data striping splits logically sequential data into consecutive segments and places them on different disks. By accessing the segments on multiple disks concurrently, you get to use the combined data throughput, which leads to improved performance.

Mirroring is self-explanatory: the data from one disk is copied onto another. The duplication is intentional, as it allows the data to be recovered if one of the disks in the array fails.

Parity is an error protection technique commonly used to provide fault tolerance and achieve redundancy. Parity data is either stored on a dedicated disk or spread out across all the disks. When a disk in the array fails, you can swap it out for a fresh disk and use the parity data together with the data from the other disks to rebuild what was lost. Generally, parity is calculated with a basic XOR across the disks’ data, but certain levels use other schemes: RAID 2 uses Hamming codes, and RAID 6 adds a second, independently computed parity. Both are covered later in the article.

Finally, there’s the matter of RAID implementation. A RAID array can be managed by a dedicated hardware RAID controller, by software (md, ZFS, etc.), or by firmware- and driver-based implementations. Hardware RAID controllers can be pricey, while software RAID is free or affordable. However, software RAID depends on the host machine’s CPU and memory, so the performance gain can be smaller, particularly for parity-based levels. Which levels are available varies by implementation, but software RAID is not limited to the simpler ones: Linux md, for example, supports RAID 5 and RAID 6 as well as RAID 0 and RAID 1.
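To make the XOR parity idea mentioned above more concrete, here is a minimal Python sketch. It treats each disk as a short byte string, computes a parity block, and then rebuilds a "failed" disk from the survivors. The function names `xor_parity` and `rebuild` are made up for illustration and do not correspond to any real RAID tool.

```python
from functools import reduce

def xor_parity(blocks):
    """Return the byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

def rebuild(surviving_blocks, parity):
    """Recover the single missing block from the survivors plus the parity block."""
    # XOR is its own inverse, so XOR-ing everything that is left
    # cancels out the surviving data and leaves the missing block.
    return xor_parity(surviving_blocks + [parity])

# Three hypothetical data disks holding one block each.
data = [b"AAAA", b"BBBB", b"CCCC"]
parity = xor_parity(data)

# Pretend the second disk failed and rebuild it from the other two plus parity.
recovered = rebuild([data[0], data[2]], parity)
print(recovered == data[1])
```

This only works when exactly one block is missing, which is why single-parity levels tolerate one disk failure and no more.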

Standard RAID Levels

RAID levels have evolved over the years, but the currently accepted standard, as maintained by SNIA, treats RAID 0 through RAID 6 as the standard levels.

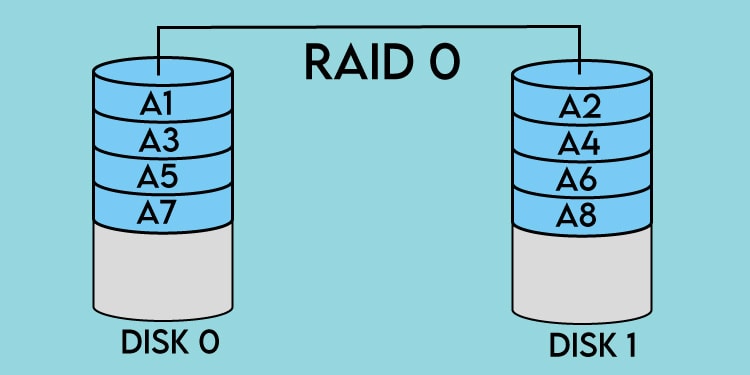

RAID 0

RAID 0 uses striping across at least two disks. As the files are spread across multiple disks, the available throughput is multiplied as well. By the same token, it’s also much more prone to failure, as any single drive failing means that all the data is lost. Since RAID 0 provides no redundancy, some argue that it shouldn’t be called RAID at all. Because of this risk, it isn’t used very often, particularly with a large number of disks, where the chance of a failure is even higher. It does still have its use cases, though. For scenarios where you only care about performance, like gaming, RAID 0 can be useful. In a RAID 0 setup, the total storage capacity is the sum of all the disks used. For instance, if two 1 TB disks are used, the available space would be 2 TB.
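As a rough illustration of striping, the sketch below maps a logical block number to a disk and a position on that disk using simple round-robin placement. It assumes equally sized disks and one block per stripe unit; `locate_block` is a hypothetical helper, not part of any RAID implementation.

```python
def locate_block(logical_block, num_disks):
    """Map a logical block number to (disk index, block offset on that disk)."""
    # Consecutive logical blocks rotate across the disks, so a long
    # sequential read touches every disk in turn.
    return logical_block % num_disks, logical_block // num_disks

# Where the first six logical blocks land in a two-disk array.
placement = [locate_block(block, num_disks=2) for block in range(6)]
print(placement)
```

Because neighbouring blocks sit on different disks, they can be read or written in parallel. It also shows why losing one disk is fatal: every other block of every file disappears with it.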

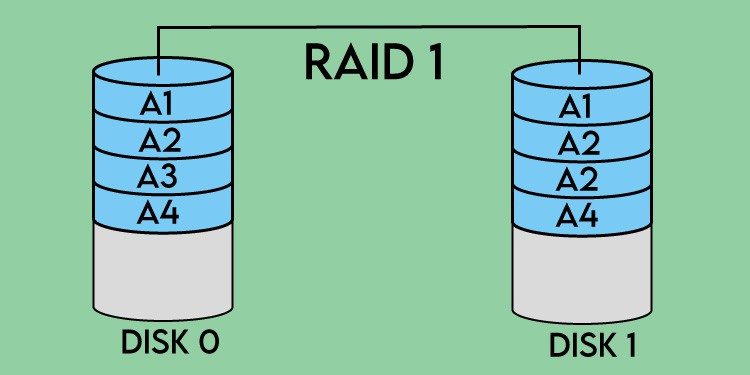

RAID 1

RAID 1 mirrors the data from one disk onto the other disks in the array. The selling point of RAID 1 is its reliability, as the array won’t fail as long as at least one drive is operational. However, as it’s designed for reliability, other factors like performance and usable storage suffer. As data can be read from any of the disks in the array, the read performance is generally close to that of the fastest drive in the array. The same can’t be said for write performance, as every write must be applied to all the disks. By design, the usable storage can also only be as large as the smallest disk in the array. For instance, if a 500 GB and a 1 TB drive are used, the usable space would be 500 GB: the smaller drive holds one copy, 500 GB of the larger drive holds the mirror, and the remaining 500 GB of the larger drive goes unused.
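The capacity rule above is easy to check with a few lines of Python. This is only a sketch of the arithmetic; `raid1_capacity` is a name invented here for illustration.

```python
def raid1_capacity(disk_sizes_gb):
    """Return (usable space, space left unused) in GB for a RAID 1 mirror."""
    # Every disk must hold a full copy, so the smallest disk sets the limit.
    usable = min(disk_sizes_gb)
    # Anything beyond that limit on the larger disks cannot be mirrored.
    unused = sum(size - usable for size in disk_sizes_gb)
    return usable, unused

# The example from the text: a 500 GB drive mirrored with a 1 TB drive.
print(raid1_capacity([500, 1000]))
```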

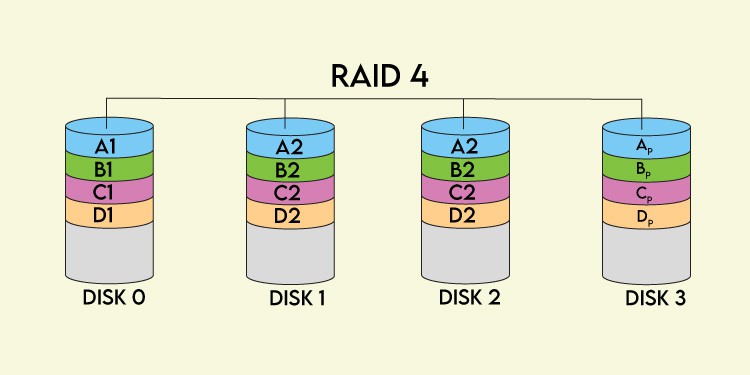

RAID 2, 3, 4

RAID 2 uses bit-level striping across disks with dedicated Hamming code parity. Basically, each sequential bit is stored on a different drive, while at least one disk is used to store parity data. As most modern storage disks include Error Correction Code (ECC), RAID 2 is no longer used. RAID 3 is similar, but it uses byte-level striping instead, while a dedicated disk is used to store parity data. RAID 3 is rarely used as well, as it was replaced by RAID 4. RAID 4 uses block-level striping with a dedicated parity disk. As the blocks of data are spread out across disks, read requests can be serviced by multiple disks, allowing for overlapping I/O. While RAID 4 does have advantages over RAID 2 and 3, like I/O parallelism, it’s not commonly used either, as higher levels like RAID 5 and 6 are largely preferred.

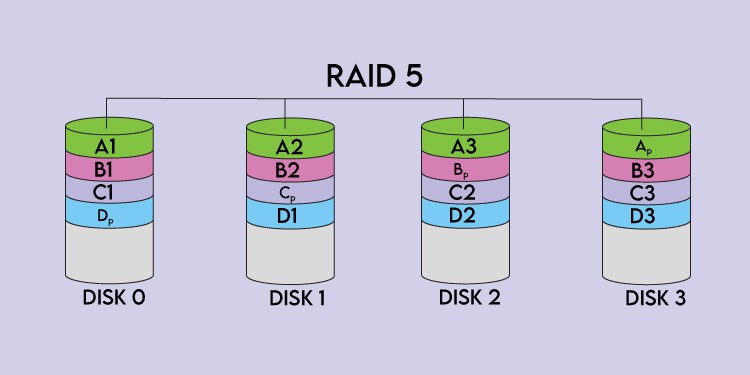

RAID 5

RAID 5 uses block-level striping with distributed parity, where the parity data is spread across all the disks. RAID 5 requires at least 3 disks, and even if one drive fails, the distributed parity can be used to rebuild the array. RAID 5 essentially provides both performance and redundancy benefits. Writing parity data does have some impact on write performance, but less than on levels with a dedicated parity disk such as RAID 4, because parity writes are shared by all the disks instead of bottlenecking on one. For all its qualities, RAID 5 does have one major flaw: its vulnerability during rebuilds. When one disk fails, rebuilding the array can take multiple hours or even days. The even bigger issue is that reconstructing the array requires the data from all the remaining disks to be read, and if a second drive fails during that window, the result is complete data loss. Two drives failing in the same window is relatively unlikely, but it’s still something to consider when deciding on your setup. As such, for optimal reliability, it would be better to stick with the higher RAID levels that we’ll discuss below.
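To picture what "distributed parity" means, the sketch below prints which disk holds the parity block for each stripe. It assumes one simple rotation in which parity moves one disk to the left on every stripe; real implementations differ in how they rotate parity and order the data blocks, so treat `raid5_layout` as a hypothetical illustration only.

```python
def raid5_layout(num_disks, num_stripes):
    """Return one row per stripe, marking data blocks (D0, D1, ...) and parity (P)."""
    rows = []
    next_block = 0
    for stripe in range(num_stripes):
        # Assumed rotation: parity starts on the last disk and shifts left each stripe.
        parity_disk = (num_disks - 1 - stripe) % num_disks
        row = []
        for disk in range(num_disks):
            if disk == parity_disk:
                row.append("P")
            else:
                row.append(f"D{next_block}")
                next_block += 1
        rows.append(row)
    return rows

for row in raid5_layout(num_disks=3, num_stripes=3):
    print(row)
```

Each stripe keeps exactly one parity block, and no single disk holds all of them, which is what spreads the parity write load across the array.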

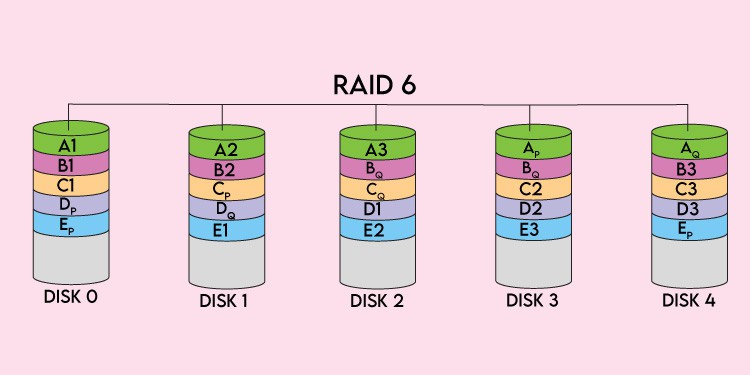

RAID 6

RAID 6 is similar to RAID 5 in that it uses block-level striping with distributed parity, but there’s one critical difference: it uses two parity schemes instead of one. The double distributed parity allows the array to remain operational despite two disk failures. The extra failsafe does come at an obvious cost: write performance is impacted, and double the space is used to store parity data. In the case of a small array like 4 disks (the minimum for RAID 6), you’re free to choose between RAID 5 or 6 depending on whether performance or reliability is the priority. But with larger arrays, it’s highly recommended to stick with RAID 6. Of course, even RAID 6 isn’t foolproof. As disk sizes grow, so do the rebuild times and, with them, the probability of another disk failing mid-rebuild. RAID 6’s effectiveness is waning, and soon triple parity may be necessary.
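The space cost of the second parity is simple to quantify. The sketch below assumes equally sized disks and compares usable capacity for RAID 5 and RAID 6; `usable_capacity_tb` is a made-up helper for illustration.

```python
def usable_capacity_tb(level, num_disks, disk_size_tb):
    """Usable capacity in TB for RAID 5 or RAID 6 built from equally sized disks."""
    parity_disks = {5: 1, 6: 2}[level]   # disks' worth of space consumed by parity
    minimum_disks = {5: 3, 6: 4}[level]  # minimum array sizes given in the text
    if num_disks < minimum_disks:
        raise ValueError(f"RAID {level} needs at least {minimum_disks} disks")
    return (num_disks - parity_disks) * disk_size_tb

# Four 4 TB disks: RAID 5 gives up one disk to parity, RAID 6 gives up two.
print(usable_capacity_tb(5, 4, 4), usable_capacity_tb(6, 4, 4))
```

On a 4-disk array, the second parity costs a quarter of the raw capacity. On larger arrays the relative overhead shrinks, which is part of why RAID 6 becomes easier to justify as the array grows.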

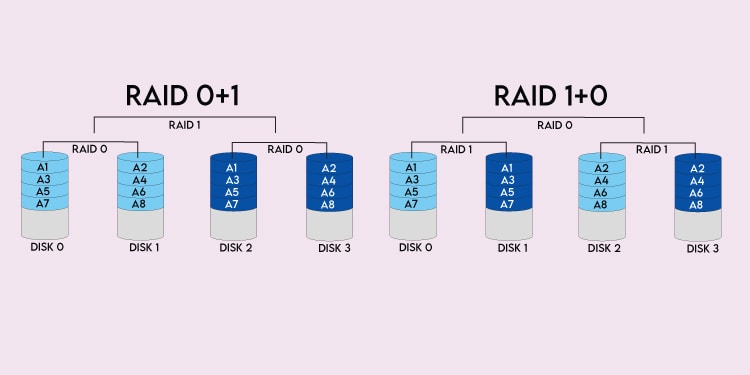

Nested RAID Levels

Nested RAID, or hybrid RAID, is basically a combination of different RAID levels. These are used for additional redundancy, performance gain, or a combination of both. For instance, with RAID 10 (RAID 1+0), where 0 is the top array, a series of disks are mirrored first, then the mirrors are striped. RAID 01 (RAID 0+1) is the opposite, where 1 is the top array. Here, the data is striped across the disks first, then the set is mirrored. It’s the same idea with other nested RAID levels like RAID 03, RAID 50, RAID 60, etc. Nested RAID generally doesn’t go deeper than one level, but there are exceptions like RAID 100 (RAID 10+0), where RAID 10 arrays are striped using RAID 0. And while we won’t go into detail about them, it’s also worth mentioning that non-standard RAID levels exist. These are often proprietary or implementation-specific designs created with a particular organization or project in mind. Some examples include Linux MD RAID 10, ZFS’s RAID-Z, and the RAID layer built for Hadoop’s HDFS.
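The order of nesting matters for fault tolerance. The sketch below enumerates every possible two-disk failure in a 4-disk array and counts how many each layout survives. It assumes a simplified model in which a RAID 0 stripe set is lost as soon as any one of its members fails; the function names and disk numbering are hypothetical.

```python
from itertools import combinations

def raid10_survives(failed, mirror_pairs):
    """Stripe over mirrors: every mirror pair needs at least one working disk."""
    return all(any(disk not in failed for disk in pair) for pair in mirror_pairs)

def raid01_survives(failed, stripe_sets):
    """Mirror of stripes: at least one stripe set must be fully intact."""
    return any(all(disk not in failed for disk in stripe) for stripe in stripe_sets)

# Disks 0-3 grouped as (0, 1) and (2, 3) in both layouts.
groups = [(0, 1), (2, 3)]
two_disk_failures = [set(pair) for pair in combinations(range(4), 2)]

raid10_ok = sum(raid10_survives(failed, groups) for failed in two_disk_failures)
raid01_ok = sum(raid01_survives(failed, groups) for failed in two_disk_failures)
print(f"RAID 10 survives {raid10_ok} of {len(two_disk_failures)} two-disk failures")
print(f"RAID 01 survives {raid01_ok} of {len(two_disk_failures)} two-disk failures")
```

Under this model, RAID 10 survives more of the two-disk failure combinations than RAID 01 with the same four disks, which is one reason it is usually the preferred of the two.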

RAID Usage and the Future

It’s no secret that RAID, as things stand, won’t be around forever. Technologies capable of better data protection, performance, and reliability, like Erasure Coding and SSDs, have emerged in recent years. With increasing disk sizes, the rebuild times (downtime) and the chance of failure when rebuilding also rise. And while multiple disks shouldn’t fail together in theory, exposing drives of the same make to the same environmental conditions can make this much more likely than most people think.

However, that’s not to say that RAID is already outdated. In enterprise server environments with large arrays where uptime is important, and more generally whenever you need to get the most out of the disks you have, RAID is still very relevant.

With that said, there are a few things to keep in mind if you’re looking into RAID setups. First, redundancy is not the same thing as backups. RAID helps with data protection but doesn’t guarantee it. The array is still susceptible to human error or viruses, and in case the disks are lost for whatever reason, having an offsite backup can be a lifesaver. Second, if you exclusively value performance, you could go with RAID 0. RAID 1 is great for redundancy, while something like RAID 5 offers a balance of both. If you value reliability more, which becomes important as the array grows larger, RAID 6 or nested RAID levels would be even better options, but they come at an additional cost, as more disks are required for the higher RAID levels. Ultimately, it’s just a matter of finding the best balance of performance, protection, and cost for your specific circumstances.